The AI Juggernaut, Or: NVIDIA Is Almost “AI Diva” Spelled Backwards


Cody is going to be in San Francisco this week (giving the keynote and other talks Wednesday at the MoneyShow), and would be happy to meet up with some subscribers in SF, so if interested, please write us back.

On that note, if anyone knows any robotics companies based in SF that Cody can meet up with during the week, please shoot us an email about that too. Thank you in advance.

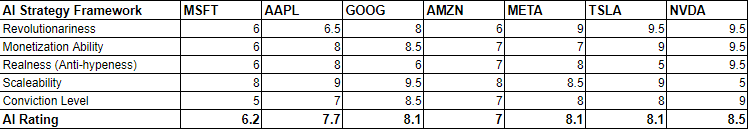

This is Part 3 of a series of articles breaking down and ranking the AI strategies of the Magnificent 7.

“And so that became the company’s mission, to go build a computer, the type of computers and solve problems that normal computers can’t, and to this day we’re focused on that. And if you look at all the problems in the markets that we opened up as a result, it’s things like computational drug design, weather simulation, materials design. These are all things that we’re really, really proud of. Robotics, self-driving cars, autonomous software we call artificial intelligence. And then of course we drove the technology so hard that eventually the computational cost went to approximately zero. And it enabled a whole new way of developing software, where the computer wrote the software itself, artificial intelligence as we know it today. And so that was the journey.” (NVIDIA CEO Jensen Huang, speaking at Stanford Business School, March 5, 2024)

Going in order of current market capitalization, third up in our review of the Mag 7 is NVIDIA (NVDA). NVIDIA has been the AI story for most of the last year and a half. But before that, NVIDIA was known primarily for its graphics cards, which shipped in high-end gaming PCs. That all changed in November 2022 with the unveiling of OpenAI’s ChatGPT, which Trading With Cody subscribers learned about on day 1.

Perhaps more than anything else, ChatGPT showed us the potential of what AI could do. ChatGPT sparked an idea in the minds of nearly every CEO on the planet, who all of a sudden realized that AI would be huge for their industries. More importantly, nearly every big tech company shifted its entire product roadmap to focus on AI.

NVIDIA builds a different kind of chip from traditional processors (CPUs), which have traditionally been made by the likes of Intel and AMD (which also makes GPUs). NVIDIA builds graphics processing units (GPUs), which are great at performing millions of simple computations simultaneously. NVIDIA invented the GPU, and today NVIDIA’s competitors also sell GPUs (also known as “accelerators,” since they aren’t used for graphics anymore), but NVIDIA still controls the lion’s share of the GPU market. Here’s ChatGPT’s explanation of the difference between GPUs and CPUs, and how GPUs enable AI:

Accelerated computing with GPUs enables AI by leveraging their parallel processing capabilities and optimization for matrix operations. Unlike CPUs, which excel at sequential tasks, GPUs can handle multiple tasks simultaneously and perform matrix operations much faster, making them ideal for training and running AI models. This efficiency, scalability, and speed of GPUs significantly accelerate the development and deployment of AI applications compared to traditional CPU-based computing.
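To make the "matrix operations" point concrete, here is a minimal Python sketch (our own illustration, not NVIDIA code). It shows that every cell of a matrix product is an independent dot product, which is why the work can be spread across thousands of GPU cores. The function names are hypothetical, and the thread pool only stands in for parallel hardware: ordinary Python threads will not actually run this faster.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Compute one output cell: multiply pairwise, then sum."""
    return sum(a * b for a, b in zip(row, col))


def matmul_sequential(A, B):
    """CPU-style approach: compute the output cells one after another."""
    cols = list(zip(*B))  # columns of B
    return [[dot(row, col) for col in cols] for row in A]


def matmul_parallel(A, B, workers=4):
    """GPU-style idea: every cell is an independent task handed to a worker.

    Assumption: the thread pool is only a stand-in for parallel hardware.
    """
    cols = list(zip(*B))
    cells = [(row, col) for row in A for col in cols]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda rc: dot(*rc), cells))
    n = len(cols)
    # Regroup the flat list of cells back into rows.
    return [flat[i:i + n] for i in range(0, len(flat), n)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_sequential(A, B))
print(matmul_parallel(A, B))
```

Both functions return the same matrix. The only difference is that the second never needs one cell's result to compute another, and that independence is the property GPUs exploit at scale.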

It did not take long for the market to realize that NVIDIA would be the first primary beneficiary of the world’s new fascination with AI. NVIDIA’s mission has always been to advance what it calls “accelerated computing,” and its processors were the key to unlocking the potential of AI. NVIDIA CEO Jensen Huang had been extremely early in seeing how important AI would be and had been “skating to where the puck was going,” as Cody often says, quoting The Great One. When the ChatGPT moment happened, it was NVIDIA that was behind the curtain enabling the entire Revolution.

AI in its current form would not exist without NVIDIA. Jensen Huang and his team worked with some of the first AI researchers at Stanford (like Andrew Ng) to develop a GPU-accelerated software library for running deep learning algorithms (known as cuDNN). NVIDIA reportedly did not make any money developing cuDNN, and at that time there was no known market for the technology.

NVIDIA’s early push in AI started to pay huge dividends when the Sexy 6 (excluding Apple) all decided that they had to start building and retrofitting their data centers with NVIDIA chips if they were to have a chance of keeping up in AI. Moreover, hundreds of AI startups started building AI applications that further increased the demand for AI compute. This translated to tens of billions of dollars of new revenue for NVIDIA. For most of the last eighteen months, demand for NVIDIA chips far exceeded supply, which allowed NVIDIA to sell these chips at much higher prices, raising the company’s overall gross margin from 62% in 2021 to 72% in 2023. NVIDIA’s annual revenue went from $16.6 billion to $60.9 billion over the same period.

NVIDIA’s AI strategy going forward is fairly simple, and the company does not face the same question that is facing the Sexy 6 (namely, how will they monetize AI?). GPUs will continue to be the most sought-after chips for AI processing. As mentioned in the first article, the Sexy 6 will likely spend over $200 billion on Capex in 2024, and a good portion of that will be going to NVIDIA processors. For context, AMD predicts that its competitor product (the MI300 accelerators) will generate about $4 billion in revenue for the company this year. Intel’s Gaudi lineup of accelerators will do about $500 million in 2024. NVIDIA will likely earn close to $100 billion in data center revenue in 2024.
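To put those three figures side by side, here is a quick back-of-the-envelope sketch in Python. It uses only the 2024 estimates quoted above, and it is not a like-for-like comparison: the NVIDIA number is total data center revenue, while the AMD and Intel numbers are for their accelerator lines only. The variable names are ours.

```python
# 2024 revenue estimates from the paragraph above, in billions of USD.
# Assumption: treating these three figures as one combined pool is a
# simplification made for illustration only.
revenue_2024_usd_bn = {"NVIDIA": 100.0, "AMD": 4.0, "Intel": 0.5}

total = sum(revenue_2024_usd_bn.values())
shares = {name: rev / total for name, rev in revenue_2024_usd_bn.items()}

for name, share in shares.items():
    print(f"{name}: {share:.1%} of the combined total")

# How many times larger NVIDIA's figure is than each competitor's.
nvidia_vs_amd = revenue_2024_usd_bn["NVIDIA"] / revenue_2024_usd_bn["AMD"]
nvidia_vs_intel = revenue_2024_usd_bn["NVIDIA"] / revenue_2024_usd_bn["Intel"]
print(f"NVIDIA vs AMD: {nvidia_vs_amd:.0f}x, NVIDIA vs Intel: {nvidia_vs_intel:.0f}x")
```

On these estimates NVIDIA's figure is about 25 times AMD's and about 200 times Intel's.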

We expect NVIDIA will continue to push the envelope of physics and develop increasingly powerful chips that in turn empower better AI, which will be used by NVIDIA to build better chips. One of the most fascinating examples of the potential of AI is NVIDIA’s use of its own chips and software to make better chips. NVIDIA worked with TSMC and Synopsys (which makes electronic design automation (EDA) software) to do exactly that. In short, NVIDIA’s H100s were combined with generative AI software to design better chips in a fraction of the time. NVIDIA reported that its new computational lithography program, cuLitho, accelerated the design process by 40-60x.

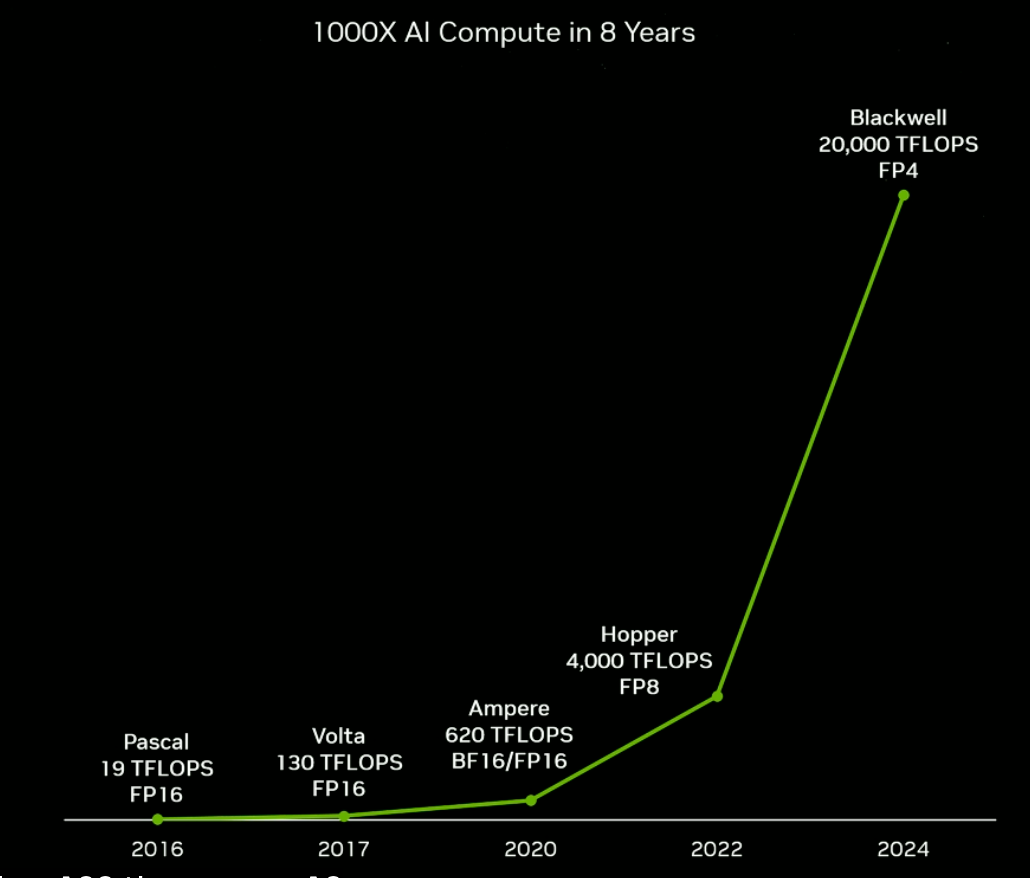

Using the very AI that its chips enable, NVIDIA has already accelerated the rate of improvement in its AI chips. Below is one of our favorite graphics from NVIDIA’s GTC conference earlier this year because it shows how NVIDIA has taken Moore’s law to the next level. This is yet another example of how the Kurzweilian rate of change actually accelerates the rate of technological advancement.

Under Moore’s law, we would have expected a 36x increase in compute power in eight years. But NVIDIA accelerated that to 1000x in eight years because of its Revolutionary approach to building AI chips.
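One way to compare those two numbers is to convert each into an implied doubling time. The short Python sketch below does that arithmetic. It assumes smooth exponential growth over the eight years, which is our simplification, and the function name is ours rather than anything from NVIDIA.

```python
import math


def doubling_time_months(total_gain, years):
    """Months per doubling implied by a total gain over a number of years.

    Assumption: growth is smoothly exponential across the whole period.
    """
    doublings = math.log2(total_gain)  # how many times compute doubled
    return years * 12 / doublings


moore = doubling_time_months(36, 8)     # the 36x Moore's law baseline
nvidia = doubling_time_months(1000, 8)  # the 1000x NVIDIA figure

print(f"36x in 8 years   -> doubling about every {moore:.1f} months")
print(f"1000x in 8 years -> doubling about every {nvidia:.1f} months")
```

On these figures, the 36x baseline works out to a doubling roughly every 18 to 19 months, while 1000x in eight years means a doubling in under 10 months.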

In addition to offering the best chips, one of the key factors in NVIDIA’s success is its platform approach. Jensen Huang described the company as a “technology platform company” that “exists in service to a bunch of other companies.” NVIDIA’s platform includes its comprehensive suite of software, including its Compute Unified Device Architecture (CUDA), which helps NVIDIA’s customers use its products. CUDA and its variants include hundreds of libraries made for specific use cases. These libraries allow developers to work with NVIDIA chips more efficiently than manually writing all of the required code in a traditional language like C/C++. Having built this software ecosystem around its products further entrenches NVIDIA as the de facto standard for AI chips.

NVIDIA’s main threat at this point is probably not AMD, Intel, or any of the dozen or so chip startups that are trying to compete with it right now, but rather NVIDIA’s customers themselves. As we wrote about in our Amazon best-selling book, The Great Semiconductor Shift, the Sexy 6 are all working on developing and implementing their own custom chips. This is in no small part due to the rising cost and short supply of the best NVIDIA chips. Google developed its own custom AI chip, the Tensor Processing Unit (TPU), began using it internally in 2015, and then offered it to its cloud customers in 2018. Amazon built its first custom chip, Nitro, in 2013, and today it’s the highest-volume AWS chip. Meta and Microsoft are both building custom AI chips for their data centers. Tesla designed its custom Dojo AI System on Wafer (SoW), which is a first of its kind and is now in volume production at TSMC, and will use it to train cars to drive themselves.

Despite massive investments from the Sexy 6 in their custom silicon efforts, they are still trying to get as many NVIDIA chips as they can. It turns out that when it comes to AI chips, it’s not “and” or “or,” it’s “both, and more.” The Sexy 6 will likely continue to focus heavily on developing their custom silicon programs, while simultaneously trying to get their hands on piles of NVIDIA H100s.

We rate NVIDIA’s AI strategy 8.5/10.

The company gets high marks for its Revolutionariness, Monetization Ability and Realness, since the AI dollars are already flowing to the bottom line in a big way. The only factor that we rate poorly is Scalability, due to the limited size of the customer base and the risk that those customers eventually start using more of their own custom silicon, or silicon from NVIDIA’s competitors if (and that’s a big if) they actually release a comparable product.